How-to: BQL export

Step-by-step practical case - Your first data export

1. Identify your target project

As explained in Getting started, you will need to find your username and Botify project slug which are used to identify the project.

You will also need your API token, which you should be able to find following the instructions on the same page.

In the rest of this example, we will consider these values:

- Username:

botify-team - Project slug:

botify-blog - API token:

123

2. Identify the data you want to export

There are two methods to identify the data you want to export:

Constructing a BQL Query

For our first export, we will retrieve a handful of metrics for each URL of the latest crawl on the project.

First, we need to retrieve the slug of the last analysis. Here you can find instructions to find this slug. For the purpose of the example, let's consider the last crawl occurred on February 5, 2021:

- Analysis slug: 20210205

If we wanted to simply query the data, we would write such a BQL query:

{

"collections": ["crawl.20210205"],

"query": {

"dimensions": [

"url",

"crawl.20210205.depth",

"crawl.20210205.http_code",

"crawl.20210205.byte_size"

],

"metrics": [],

"sort": [1]

}

}This queries the URL, its depth, HTTP code and size in bytes, ordered by the depth in an ascending order.

3. Prepare your export job API call

We now want to write the API call and specify where and how we want the data. For this example, we will go with one of the simplest configurations:

- Backend: direct download - we let Botify store the export for us

- Formatter: CSV - a common format

- Export size: 5000 rows - just to verify that the export works and the result satisfies our needs

The API call will be made to POSThttps://api.botify.com/v1/jobs. Below, you will find the JSON payload of the export and a cURL that can be copy/pasted and replaced with your values.

Use your configurationDon't forget to replace the cURL with your:

- username, in the payload

- project slug, in the payload

- analysis slug, in the BQL collections payload

- API token, in the HTTP headers

curl --location --request POST 'https://api.botify.com/v1/jobs' \

--header 'Authorization: Token 123' \

--header 'Content-Type: application/json' \

--data-raw '{

"job_type": "export",

"payload": {

"username": "botify-team",

"project": "botify-blog",

"connector": "direct_download",

"formatter": "csv",

"export_size": 5000,

"query": {

"collections": ["crawl.20210205"],

"query": {

"dimensions": [

"url",

"crawl.20210205.depth",

"crawl.20210205.http_code",

"crawl.20210205.byte_size"

],

"metrics": [],

"sort": [1]

}

}

}

}'4. Run the API call

Using your favorite tool (command line cURL, Postman, Insomnia, etc.) send this request to our servers. If everything went fine, you will get back a response with a 201 Created and a response body what will look like this:

{

"job_id": 987,

"job_type": "export",

"job_url": "/v1/jobs/987",

"job_status": "CREATED",

"payload": {

"sort_url_id": false,

"connector": "direct_download",

"formatter_config": {

"delimiter": ",",

"print_delimiter": false,

"print_header": false,

"header_format": "verbose"

},

"export_size": 5000,

"query": {

"query": {

"metrics": [],

"sort": [1],

"dimensions": [

"url",

"crawl.20210205.depth",

"crawl.20210205.http_code",

"crawl.20210205.byte_size"

]

},

"collections": ["crawl.20210205"]

},

"formatter": "csv",

"extra_config": {}

},

"results": null,

"date_created": "2021-02-06T16:00:00.000000Z",

"user": "botify-team",

"metadata": null

}The parts that interest us here are:

- job_status: CREATED means that the export job has been created successfully and will be launched soon.

- job_id: this number is the unique identifier of your job in our system. We will use it to verify if our job is finished or not.

The rest of the payload is not of too much interest for us. It is an explicit expression of all default parameters that were used for the export.

5. Fetch the results

Now that we launched an export job, we want to retrieve the results. For that, we only need the job_id that the previous HTTP request returned us.

To fetch the job status, we will make a GEThttps://api.botify.com/v1/jobs/JOB_ID. This call will return the updated information of the job's status.

In our example, here is the associated cURL:

curl --location --request GET 'https://api.botify.com/v1/jobs/987' \

--header 'Authorization: Token 123'If we made rather small export, you should get a response that looks like this:

{

"job_id": 987,

"job_type": "export",

"job_url": "/v1/jobs/987",

"job_status": "DONE",

"results": {

"nb_lines": 5000,

"download_url": "https://d121xa69ioyktv.cloudfront.net/collection_exports/some_url/botify-2021-02-06.csv.gz"

},

"date_created": "2021-02-06T16:00:00.000000Z",

"payload": {

...

},

"user": "botify-team",

"metadata": null

}If the job status is DONE, congratulations 🎉 your first data export completed successfully.

You might notice that the results key is now filled with a download URL. Just click or copy/paste the URL into your browser and you will be able to download the file containing your data.

If the response is not in the status of DONE, it can either be:

- CREATED - we did not start to compute the export yet. Sorry for the inconvenience, it should start anytime soon.

- PROCESSING - the export is being computed, give us a minute and retry the same request again.

- FAILED - something when wrong. The response payload should give you more insights about what went wrong. If you are stuck, don't hesitate to reach out to our support team.

6. Extract your data

Once you download the file, you will need to extract the data from the compressed .gz format. Your Operating System should be able to handle this (for example by right-clicking on the file and choosing Extract Here).

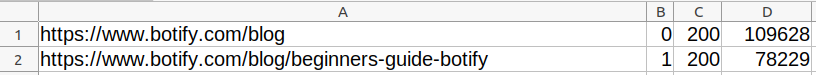

Once extracted, you can open it in your favorite Speadsheet tool (Excel, Google Sheets, LibreOffice Calc, etc.) and voilà ✨ a CSV with 4 columns: the URL, depth, HTTP code and size.

Top of a spreadsheet containing the exported columns.

Updated 5 months ago

See the BQL export reference and how to go further with BQL queries